If sociologist Cristobal Young were to put out a lawn sign, it wouldn’t proclaim the popular “Believe science.” Instead, it would carry a more nuanced message: “Advance the highest standards of rigor in science.”

“We live in a time when dubious claims are everywhere – not just in politics or social media but also in scientific research,” said Young, associate professor of sociology in the College of Arts and Sciences (A&S). “Every year, a vast amount of research is published, but many individual studies aren’t as rigorous or compelling as they seem. Some findings gain attention not because they’re the strongest evidence, but because they’re flashy, novel, or confirm what people want to believe.”

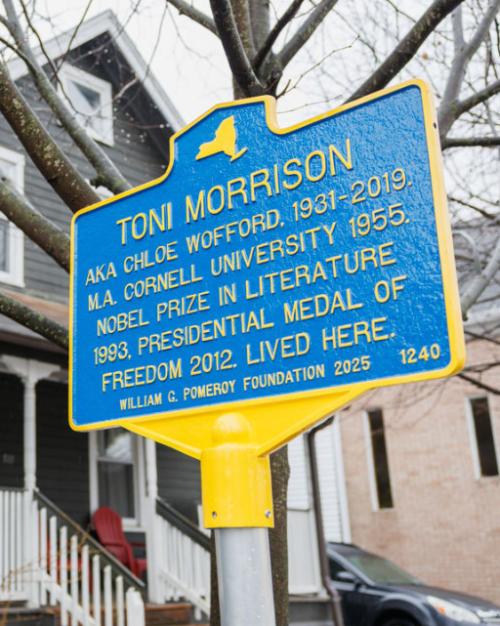

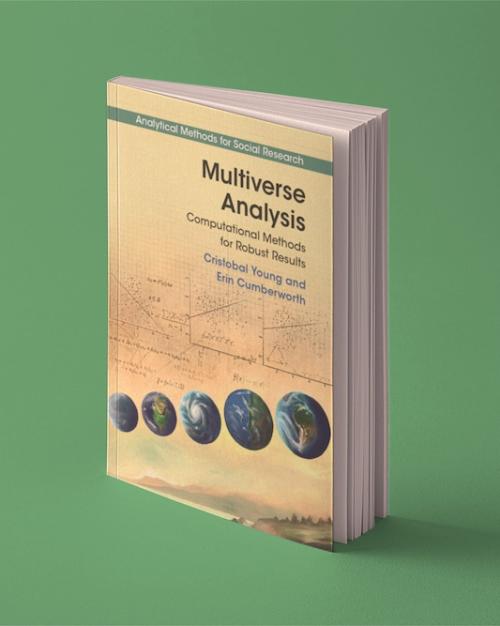

To cut through misinformation, noise and fragile claims, Young has written a book calling social science researchers to the highest standards of evidence through “multiverse analysis,” an approach that reveals the full range of estimates the data can support. In “Multiverse Analysis: Computational Methods for Robust Results,” published in March by Cambridge University Press, Young and co-author Erin Cumberworth, sociology researcher (A&S), explain the roots of their view – physics’ concept of the multiverse – and provide a step-by-step guide.

The Chronicle spoke with Young about the book.

Question: Why is the concept of the multiverse from physics a fitting term to apply in social science research?

Answer: In physics, the multiverse refers to parallel universes – alternate realities where things unfold differently. Social science research can be understood in a similar way: The findings we see depend on a series of analytical choices, many of which are uncertain or debatable.

Research is a garden of forking paths: Every study involves dozens of decisions, such as how to define variables, which controls to use, what statistical model to run. Statistical theory provides only rough guidance, and reasonable researchers could make different choices. Yet most research papers present only one polished version of the analysis, even though alternative choices could lead to different conclusions. I call these “single path” studies because they only reveal one outcome from a much larger decision tree.

A multiverse analysis brings those hidden possibilities to light. Instead of asking, “What if we had analyzed the data differently?” we can rerun the analysis 100 or 1,000 different ways, varying reasonable choices, and visualize all those results.
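
As a rough illustration of rerunning one analysis many ways, the sketch below fits the same regression under every possible subset of control variables and collects the resulting estimates. It is a minimal example on synthetic data, not the procedure from the book: the function names `ols_coef` and `multiverse_estimates`, the variables `z1`, `z2` and `z3`, and the simulated effect sizes are all assumptions made for illustration, and a real multiverse would typically vary more than the choice of controls.

```python
from itertools import combinations

import numpy as np


def ols_coef(y, X):
    """Return OLS coefficients for y ~ X (X must already contain an intercept column)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def multiverse_estimates(y, treatment, controls):
    """Estimate the treatment coefficient under every subset of the controls.

    controls: dict mapping a control's name to a 1-D array.
    Returns a list of (tuple_of_control_names, treatment_estimate).
    """
    names = sorted(controls)
    n = len(y)
    results = []
    for k in range(len(names) + 1):
        for subset in combinations(names, k):
            cols = [np.ones(n), treatment] + [controls[c] for c in subset]
            X = np.column_stack(cols)
            # Column 0 is the intercept, column 1 is the treatment.
            results.append((subset, ols_coef(y, X)[1]))
    return results


# Synthetic data (hypothetical): z1 confounds the x -> y relationship,
# z2 affects only y, and z3 is pure noise.
rng = np.random.default_rng(0)
n = 500
z1 = rng.normal(size=n)
z2 = rng.normal(size=n)
z3 = rng.normal(size=n)
x = 0.8 * z1 + rng.normal(size=n)
y = 0.5 * x + 1.0 * z1 + 0.3 * z2 + rng.normal(size=n)

results = multiverse_estimates(y, x, {"z1": z1, "z2": z2, "z3": z3})
estimates = np.array([est for _, est in results])

print(f"{len(results)} specifications")
print(f"estimate range: {estimates.min():.2f} to {estimates.max():.2f}")
for subset, est in results:
    print(f"controls={subset or '(none)'}: estimate={est:.2f}")
```

In this toy setup the estimate depends mainly on whether the confounder `z1` is controlled for, which is the kind of pattern a plot of all the estimates would make visible.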

The multiverse concept reminds us that research doesn’t exist in a single, predetermined reality – it exists in many possible analytical worlds.

Q: How has the rise of computational power changed the landscape of empirical research?

A: It has created a credibility crisis in research. Analysts today can run hundreds or even thousands of statistical models, fine-tuning their work until they narrow in on their favorite results. But readers only ever see the small handful of findings that researchers choose to present. This creates a problem of asymmetric information: Analysts know far more about how sensitive and reliable their results are than readers do.

Decades ago, when calculations had to be done by hand, by calculator or on early computers, estimating a statistical model was labor-intensive and slow. Researchers typically ran one analysis, not because they thought it was the only valid one, but because they didn’t have the time or resources to explore alternatives.

That constraint created a kind of equality between analysts and readers. Both had access to the same single result, and any alternative models were purely hypothetical.

Now, with near-unlimited computational power, that equality has disappeared. Analysts can explore vast numbers of models, testing different decisions behind the scenes, but – if they want – show readers only their favorite or most impressive results. This growing gap between analyst and reader, where one side knows far more than the other, is a fundamental challenge for the credibility of modern empirical research.

Our goal is to use computational power to inform the reader, not just the analyst.

Q: How has multiverse analysis made a difference in recent research projects?

A: The book examines many empirical cases, and multiverse analysis reveals strikingly different patterns. Some findings are extremely robust; no matter how you run the analysis, the results remain the same. Others are highly fragile, where only one in 100 models shows a significant effect. In both cases, you often see a plausible theory paired with statistically significant results. The difference is that one finding holds up across many reasonable analytical choices, while the other does not.

The most interesting cases are those with mixed robustness, where half the models show an effect while the other half do not. In these situations, conclusions often hinge on very specific analytical choices: one or two control variables, a particular way of coding a key variable, or another specific analytical decision. These cases often point to the need for further investigation.
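
One way to summarize such a mixed case is to compute the share of models that reach significance and then ask how much each analytical choice moves the estimate. The sketch below is only an illustration under stated assumptions: the helper names `share_significant` and `influence_of_choice` are hypothetical, the `toy_models` numbers are made up purely to show the mechanics, and the 1.96 cutoff is the usual normal-approximation threshold for a two-sided 5% test.

```python
from statistics import mean


def share_significant(models, z_crit=1.96):
    """Fraction of models whose |estimate / std_err| exceeds z_crit."""
    flags = [abs(m["estimate"] / m["std_err"]) > z_crit for m in models]
    return sum(flags) / len(flags)


def influence_of_choice(models, choice):
    """Mean estimate among models that include `choice` minus the mean among those that do not."""
    with_c = [m["estimate"] for m in models if choice in m["controls"]]
    without_c = [m["estimate"] for m in models if choice not in m["controls"]]
    return mean(with_c) - mean(without_c)


# Made-up results for four specifications (illustrative only, not real findings).
toy_models = [
    {"controls": (), "estimate": 0.90, "std_err": 0.10},
    {"controls": ("age",), "estimate": 0.88, "std_err": 0.10},
    {"controls": ("income",), "estimate": 0.12, "std_err": 0.10},
    {"controls": ("age", "income"), "estimate": 0.10, "std_err": 0.10},
]

print(f"share significant: {share_significant(toy_models):.2f}")
for choice in ("age", "income"):
    print(f"influence of {choice}: {influence_of_choice(toy_models, choice):+.2f}")
```

In these invented numbers, half the models are significant, and nearly all of the variation traces to a single control, which is the pattern that would flag that one decision for closer scrutiny.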

Multiverse analysis doesn’t just test findings; it helps uncover where deeper investigation is needed. It shifts the focus from simply reporting results to understanding how much those results depend on researcher choices.

Read the story in the Cornell Chronicle.