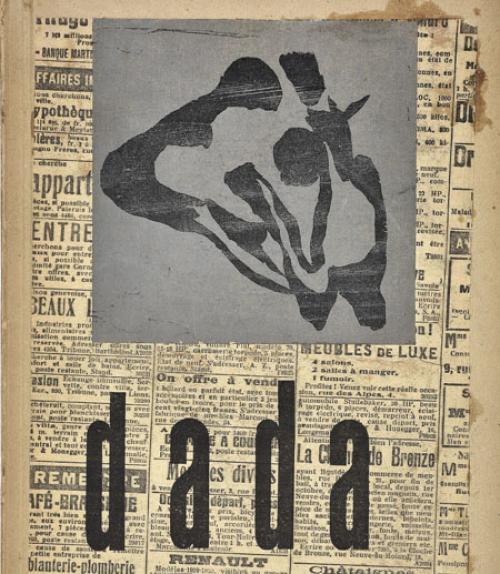

To make a Dadaist poem, artist Tristan Tzara once said, cut out each word of a newspaper article. Put the words into a bag and shake. Remove the words from the bag one at a time, and write them down in that order.

This “bag of words” method is not entirely different from how artificial intelligence algorithms identify words and images, breaking them down into components one step at a time. The similarity inspired Cornell researchers to explore whether an algorithm could be trained to differentiate digitized Dadaist journals from non-Dada avant-garde journals – a formidable task, given that many consider Dada inherently undefinable.

But the algorithm – a convolutional neural network typically used to identify common images – performed better than random. It correctly identified Dada journal pages 63 percent of the time and non-Dada pages 86 percent of the time.

“Our goal is not necessarily to get the ‘right’ answer, but rather to use computation to provide an alien, defamiliarized perspective,” the researchers wrote in “Computational Cut-Ups: The Influence of Dada,” which was published in the Journal of Modern Periodical Studies in January. “Can a tool designed for identifying dogs be repurposed for exploring the avant-garde?”

They also sought to provide an example of how large collections of images might be analyzed, said Laure Thompson, a doctoral student in computer science, who co-authored the article with David Mimno, assistant professor of information science.

Text mining – searching large bodies of digitized text for certain words or phrases – has become widely used in the digital humanities, but searching for images is far more difficult.

“Text has very convenient features – they’re known as words. And we can see them really quickly because of the spaces between them,” Thompson said. “Whereas an image to a computer is just a large matrix of numbers, and that’s known to be not very meaningful.”
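Thompson's contrast can be illustrated with a minimal Python sketch. The sample sentence and the tiny 3-by-3 grayscale "image" are invented for illustration and do not come from the study.

```python
from collections import Counter


def bag_of_words(text):
    """Split text on whitespace and count each word, ignoring word order."""
    return Counter(text.lower().split())


# Text: the spaces between words hand us ready-made features.
sentence = "dada is dada is not dada"
counts = bag_of_words(sentence)
print(counts.most_common())

# Image: a hypothetical 3x3 grayscale picture is only a grid of
# brightness values (0 = black, 255 = white) with no built-in units of meaning.
image = [
    [0, 255, 0],
    [255, 255, 255],
    [0, 255, 0],
]
flat = [value for row in image for value in row]
print(flat)
```

Counting words gives an immediately interpretable summary, while the flattened pixel list says little on its own, which is the gap the neural network is meant to bridge.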

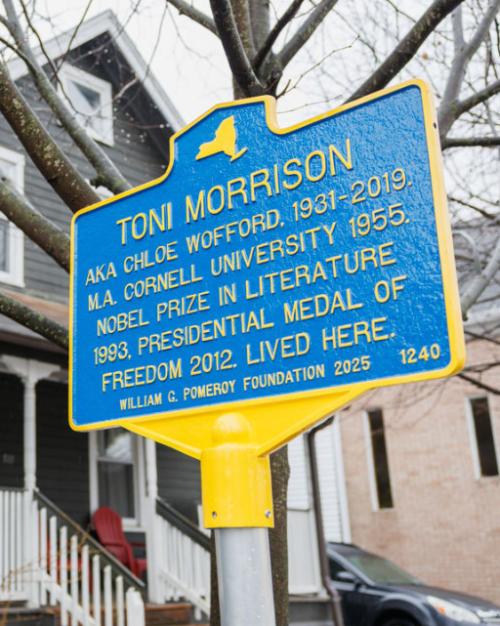

Thompson and Mimno trained their neural network on Dadaist journals from Princeton University’s Blue Mountain digital archive. Without knowing anything about Dada – an avant-garde movement that emerged in Europe after World War I and sought to upend materialism and convention – the algorithm then attempted to classify around 33,000 journal pages as either Dada or not-Dada.

The network learns to identify images through progressively more complex layers – early layers might spot simple structures like edges or right angles, while the final layer will attempt to label the image as, say, a sheepdog.

In this study, the researchers analyzed the network’s next-to-last layer, which consists of a series of numbers rather than labels such as “sheepdog.” Mimno and Thompson referred to these numerical layers as “computational cut-ups,” a nod to the Dadaist “bag of words” concept.
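The difference between the final label and the next-to-last layer described above can be sketched with a toy network in plain Python. This is only an illustration: the weights, the two labels and the four-pixel "page" are hypothetical, and the real network used in the study is far larger.

```python
import math


def dense_relu(weights, biases, inputs):
    """One fully connected layer followed by a ReLU activation."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy weights, chosen by hand for illustration only.
HIDDEN_W = [[0.5, -0.2, 0.1, 0.0], [-0.3, 0.8, 0.0, 0.4], [0.2, 0.2, -0.5, 0.6]]
HIDDEN_B = [0.0, 0.1, -0.1]
OUTPUT_W = [[1.0, -1.0, 0.5], [-0.5, 1.2, 0.3]]
OUTPUT_B = [0.0, 0.0]
LABELS = ["sheepdog", "teapot"]


def penultimate_features(pixels):
    """Return the next-to-last layer: a list of numbers, not a label."""
    return dense_relu(HIDDEN_W, HIDDEN_B, pixels)


def predict_label(pixels):
    """Run the final layer on top of the features to pick a label."""
    features = penultimate_features(pixels)
    scores = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(OUTPUT_W, OUTPUT_B)
    ]
    probs = softmax(scores)
    return LABELS[probs.index(max(probs))]


page = [0.9, 0.1, 0.4, 0.7]  # a made-up four-pixel "page"
features = penultimate_features(page)
label = predict_label(page)
print(features)
print(label)
```

The list returned by `penultimate_features` plays the role of a “computational cut-up”: it can be handed to a separate classifier, such as one that separates Dada from not-Dada, without ever using the original labels.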

The algorithm “may be almost the antithesis of art, but it’s also playing with all these methodologies that were appearing in Dada itself,” Thompson said.

Though they didn’t know how the algorithm made its decisions, the researchers worked backward from the results. They found that the network associated Dada with the color red, high contrast and prominent edges. It tended to classify pages with realistic images and photographs as not-Dada, they found.

Of the other genres the algorithm analyzed, it most frequently misidentified Cubism as Dada – which made sense to the researchers, as Cubism strongly influenced Dada art.

Before conducting the Dada experiment, the researchers tested their concept on pages containing music. The algorithm identified 67 percent of the 3,450 pages with musical scores as “music,” and 96 percent of the 55,007 pages without music as “not music.” They found the model tended to classify pages with neat, horizontal tables as music, and pages with color or images as “not music.”
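The reported rates can be combined into summary scores with a few lines of Python. The sketch below assumes the rounded percentages and page counts quoted in this article, so the results are approximate; the function names are illustrative.

```python
def overall_accuracy(pos_rate, pos_total, neg_rate, neg_total):
    """Share of all pages classified correctly, weighting each class by its size."""
    correct = pos_rate * pos_total + neg_rate * neg_total
    return correct / (pos_total + neg_total)


def balanced_accuracy(pos_rate, neg_rate):
    """Average of the two per-class rates, ignoring class sizes."""
    return (pos_rate + neg_rate) / 2


def majority_baseline(pos_total, neg_total):
    """Accuracy of always guessing the larger class."""
    return max(pos_total, neg_total) / (pos_total + neg_total)


# Music experiment: 67% of 3,450 music pages, 96% of 55,007 other pages.
music_overall = overall_accuracy(0.67, 3450, 0.96, 55007)
music_balanced = balanced_accuracy(0.67, 0.96)
music_baseline = majority_baseline(3450, 55007)

# Dada experiment: only the per-class rates are given here, so only the
# balanced score can be computed.
dada_balanced = balanced_accuracy(0.63, 0.86)

print(round(music_overall, 3), round(music_baseline, 3))
print(round(music_balanced, 3), round(dada_balanced, 3))
```

Because pages without music vastly outnumber pages with music, the overall accuracy of about 94 percent is only slightly above what a model would score by always answering “not music.” The per-class rates, or their average, give a fairer picture of how well the music pages themselves were recognized.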

“If you want to project feelings onto these models, they’re pretty lazy,” said Thompson. For example, researchers have found that if you train a model to identify images of fish, and all the images provided show people holding fish, it will probably classify all images with people holding things as fish.

The model’s classifications shed some light on what characteristics may define Dada, the researchers said, even though the idea of using a machine to view art is simplistic and possibly absurd.

“This is partly a tongue-in-cheek effort. We’re not trying to be super-serious, that this classifier will beat all art historians at identifying what truly makes Dada Dada,” Thompson said. “The model knows nothing about Dada, but it can still help provide an additional perspective in thinking about it.”

The research was supported by the National Science Foundation and the Alfred P. Sloan Foundation.

This story also appeared in the Cornell Chronicle.