Two recently hired faculty members in the Department of Linguistics are expanding the use of computer modeling and experimental techniques as they forge new paths of research in the discipline.

Marten van Schijndel and Helena Aparicio, both assistant professors in the College of Arts & Sciences, study how humans perform the incredibly complicated task of understanding and processing language. Van Schijndel is also studying how well computers can replicate that process.

“The field of linguistics as a whole has moved lately to incorporate more computational methods and modeling as well as experimental data,” said Michael Weiss, professor of linguistics and chair of the department. “The appointments of professors Van Schijndel and Aparicio strengthen our excellence in computational linguistics and expand our experimental research. We are delighted to have added two dynamic and cutting-edge scholars to our department.”

Van Schijndel probes the linguistic processing of humans and of neural network language models using methodologies from psycholinguistics, comparing the predictions of computational models with human behavioral and neural responses. One project looks at the factors that might impact how people predict what comes next in a written sentence.

“We use measures of reading time and reaction time to see how people react online,” he said.

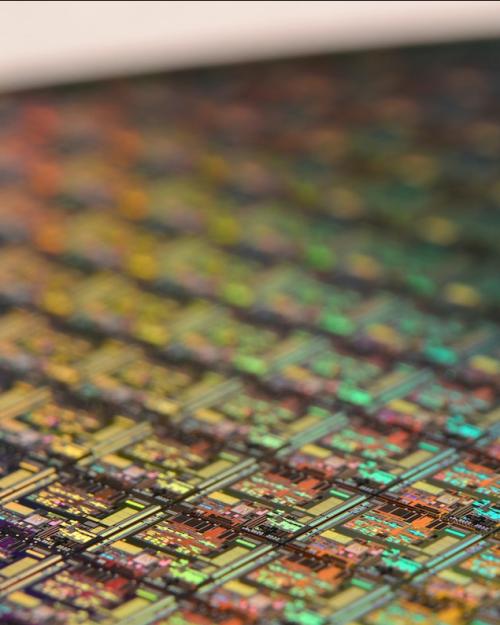

He also studies computational models, such as neural networks, that are being used to process language online.

“There is so much hype behind a lot of these AI systems,” he said. “But people think they are further along than they are. There is room for incorporating psychology and linguistics into these models to try to improve them and make them more robust.”

For example, neural networks are trained on giant data sets such as Wikipedia, which helps them predict the next word in a sentence from the preceding words and the context. But these networks can break down because they don’t really understand the external factors involved in language and communication, Van Schijndel said.

One of his recent papers demonstrated that while models can predict when people will be confused by a sentence, they can’t predict how confused people will be. “The confusion is in their heads, not in the text, so you can’t train a model on that,” he said. “Clearly there are other things happening in language acquisition that are not represented in the data.”

His group is studying how to use what it has learned about comprehension from its reading-time and reaction-time experiments to better train neural networks.

Van Schijndel teaches Computational Linguistics, a course that focuses on building tools that learn the foundations of language and on finding ways to quantify them systematically. He also teaches Natural Language Processing, a course on neural networks in the Cornell Ann S. Bowers College of Computing and Information Science, and a follow-up course, Computational Linguistics II, which focuses on understanding what neural networks are doing.

Aparicio’s research focuses on understanding which aspects of linguistic meaning are hard-coded and which are derived during linguistic interactions.

“We want to understand how humans assign meaning to linguistic strings or sentences,” Aparicio said. “We’re not only considering the semantics of the sentences themselves, but also the context in which you are having the exchange, the identity of your (conversation partner), your world knowledge and other factors.

“We try to decide how much of the semantic information goes into the final message and how much comes from all of these social aspects of linguistic interactions.”

As part of her research, Aparicio conducts experiments with subjects across the U.S. through online platforms. Her latest work explores how adaptable humans are when it comes to understanding what their interlocutor is saying.

For example, when someone says that John is tall, a listener could envision John as a 7-foot, 3-inch basketball player or as someone who is just a little taller than average. Listeners must infer the speaker’s threshold for tallness from whatever limited information is available, so arriving at a precise understanding is hard or even impossible. As comprehenders, we are constantly faced with inferring, under varying degrees of uncertainty, what the speaker meant from what was said.

In a current project, Aparicio investigates how listeners perform these inferences when their internal representation of what, for instance, being tall means differs from the speaker’s.

Her experiments expose participants, before the conversation, to information that could shift the thresholds they use to interpret other people’s use of vague words, such as “tall,” “few” or “many.” During the experiment, they might be asked to listen to conversations, view videos or read text and answer questions. Aparicio collects her data through crowd-sourcing platforms.

“The advantage of crowd-sourcing our experiments is that we can work with people from very different demographic platforms,” she said. “We can have data from hundreds of people in a matter of hours.”

After she receives results, Aparicio uses computational models and methods as tools to tease apart possible interpretations of the data.

Aparicio’s work draws on cognitive science, psychology and computer science, as well as behavioral data. Though she’s interested in basic research, her work could have many applications, including improving human-computer interaction.