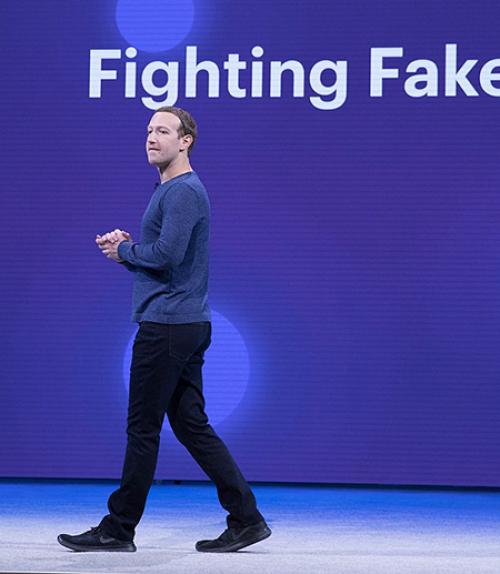

Facebook CEO Mark Zuckerberg and Twitter CEO Jack Dorsey are testifying before the Senate Judiciary Committee on Tuesday about actions their companies have taken to stem the spread of misinformation in the lead-up to and following the U.S. election.

Alexandra Cirone is a professor of government at Cornell University who teaches a course on post-truth politics. Cirone studies the spread of fake news and disinformation online and says the 2020 election unveiled the dangerous potential for domestic actors to spread lies.

“The 2020 election demonstrated that disinformation is here to stay, and has the potential to play a dangerous role in U.S. politics. But while fake news in 2016 was about foreign interference in domestic elections, 2020 showed us that social media can be a platform for domestic actors to spread lies and conspiracy theories.

“Over the past two weeks, Twitter and Facebook were working overtime to remove election misinformation from their platforms and police users, but this still wasn’t enough.

“On Facebook, there was a serious issue with the rise of public groups and page networks coordinating around false claims of electoral fraud. These began as groups forming to discuss the election, but quickly grew to include conspiracy theories and QAnon content, and eventually calling for violence. The ‘Stop the Steal’ group and hashtag, and the copycat groups that sprung out of this movement, amassed a few hundred thousand followers in just a few days. Facebook page and group algorithms also aid this exponential spread, by suggesting ‘related’ content to users.

“But some social media users are even exiting mainstream platforms and moving to other, more extreme platforms such as Parler, an ‘unbiased social media network’ with less content moderation, that is rife with misinformation. Other right-wing media outlets, such as Newsmax, and social media apps are seeing a rise in usage. If mainstream social media platforms are losing customers, this might make these companies slow to internally police during a time when it’s clear we need more regulation.

“Given what’s happened in the 2020 election, it will be interesting to see to what extent a Biden administration will pursue more active regulation of social media companies.”

---

William Schmidt is a professor of operations, technology and information management at the Cornell SC Johnson College of Business. Schmidt and colleagues at the University of California, Berkeley, and University College London recently developed a machine learning tool to identify false information early and help policymakers and platforms regulate malicious content before it spreads.

“The line between tackling false information and censorship is gray, and where that grayness stops and starts is different for different societies. Social media companies face challenges because they operate in different cultures and must balance different societal norms.

“However, social media firms generate an incredible amount of revenue, and have expended relatively little effort toward addressing a problem that their platforms have intensified. The leaders of those companies should respond to tough questions on this issue. They should recognize their ownership of the problems that their Pandora’s boxes have unleashed. When you move fast and break things, you must assume responsibility for what you have broken.

“A critical first step is early identification of the sources of false information. Research by Cornell University and UCL shows that machine learning tools can successfully identify false information web domains before they begin operations and amplify their message through social media.”

For media inquiries, contact Linda Glaser, news & media relations manager, lbg37@cornell.edu, 607-255-8942.

Photo by Anthony Quintano / Creative Commons license 2.0.